#include <src/NeuronalAgent.h>

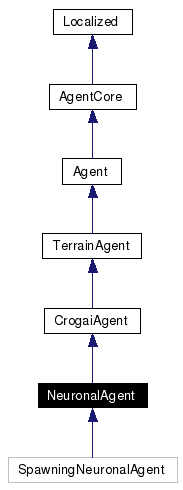

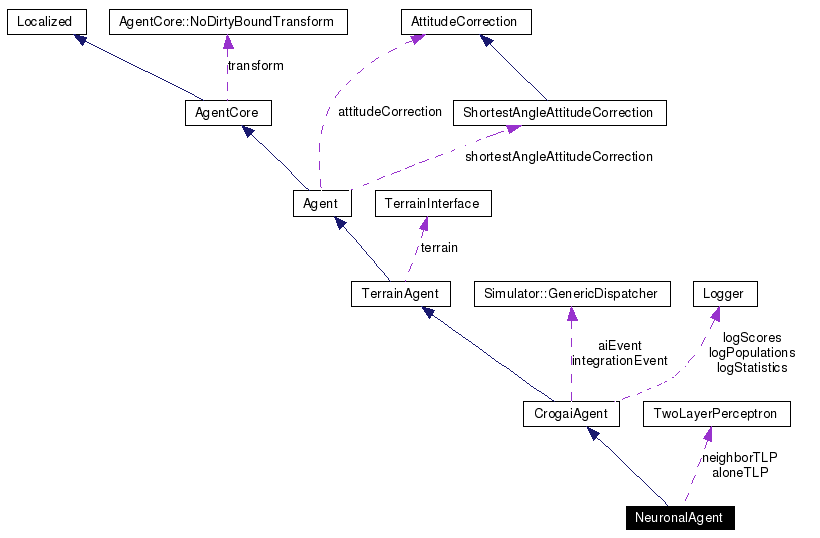

Inheritance diagram for NeuronalAgent:

First, train it to mimic another agent that has an explicit AI. This gives a non-random starting point for the later evolution, and at least gives this agent a chance to survive. Then, use a genetic algorithm to evolve a population of neuronal agents into something else.

If you're really lucky, the offspring may be more clever than the original AI, and escape into the Internet to take over the world. Please mail me if this happens :)

For each neighbor agent, the network is run with the following inputs. Parameters marked 'c' are common to all neighbor computations, but are provided nonetheless on a per-neighbor basis. Parameters marked 'n' are neighbor-dependent, and are set to 0, 0, 0, -2 respectively when there is no neighbor. Parameters marked 'e' are only used during the evolution phase, not the training phase (TODO: work in progress). They represent the acquired knowledge of the agent, and are reset when creating a new offspring.

The network outputs marked 'n' and 'c' are all coefficients to apply to a vector. See Agent.h for steering vector definitions. All neighbor-dependent vectors are set to 0 when there is no neighbor. The network outputs marked 'e' are scalar values only used after training (they are not part of the error function).

The neural network is run for each neighbor, then the computations are aggregated according to the following policies. For each result vector:

Once a steering force is computed for each output, the forces are then once again aggregated according to one of the following policies for the final steering force:

Average should in principle give smoother results, and allows training all network weights. Winner take all is useful for more natural results (e.g. avoid only the closest neighbor): it settles on one clear action when several choices are possible, instead of a mediocre average of them. Unfortunately, since gradients are 0 for the unused weights in this case, the training phase may not go so well. Averaging both neighbor computations and result vectors is thus the default, but you're encouraged to play with other policies.
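The two policies can be illustrated with a minimal sketch. It is not the class's actual code: `Vec3` is a hypothetical stand-in for `osg::Vec3d`, `aggregate` and `norm` are illustrative names, and the `User` policy is left out because it is not described here.

```cpp
#include <cmath>
#include <vector>

// Hypothetical stand-in for osg::Vec3d, so the sketch compiles on its own.
struct Vec3 {
    double x, y, z;
};

// Only the two policies described in the text; User is deliberately omitted.
enum AggregateStrategy { Average, WinnerTakeAll };

double norm(const Vec3& v) {
    return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
}

// Combine several vector contributions into one, according to the policy.
// An empty input yields the zero vector.
Vec3 aggregate(const std::vector<Vec3>& contributions, AggregateStrategy strategy) {
    Vec3 result = {0.0, 0.0, 0.0};
    if (contributions.empty()) {
        return result;
    }
    if (strategy == Average) {
        // Every contribution weighs in, so every one of them gets a gradient.
        for (const Vec3& v : contributions) {
            result.x += v.x;
            result.y += v.y;
            result.z += v.z;
        }
        const double inverseCount = 1.0 / static_cast<double>(contributions.size());
        result.x *= inverseCount;
        result.y *= inverseCount;
        result.z *= inverseCount;
    } else {
        // Winner take all: keep only the contribution with the largest norm.
        double bestNorm = -1.0;
        for (const Vec3& v : contributions) {
            const double n = norm(v);
            if (n > bestNorm) {
                bestNorm = n;
                result = v;
            }
        }
    }
    return result;
}
```

The same helper could serve both stages: once per steering force over the neighbor contributions, then once more over the resulting steering forces to get the final one.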

During the training phase, the error function is computed as follows: E = 1 - (ResultForce dot TeacherResultForce), where ResultForce is the output of all the previous computations, and TeacherResultForce is what the teacher would have done in the same situation.

Note: The training phase is stateless (no memory), so the neural network can be registered on many teachers at the same time to get data from many different situations.
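As a rough illustration of this error function, here is a small sketch. It assumes that both forces are normalized before the dot product, which the text does not state; under that assumption E ranges from 0 (same direction as the teacher) to 2 (opposite direction). `Vec3`, `normalized` and `trainingError` are hypothetical names.

```cpp
#include <cmath>

// Hypothetical stand-in for osg::Vec3d, so the sketch compiles on its own.
struct Vec3 {
    double x, y, z;
};

double dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Returns v scaled to unit length, or the zero vector if v has no length.
Vec3 normalized(const Vec3& v) {
    const double length = std::sqrt(dot(v, v));
    if (length == 0.0) {
        Vec3 zero = {0.0, 0.0, 0.0};
        return zero;
    }
    Vec3 unit = {v.x / length, v.y / length, v.z / length};
    return unit;
}

// E = 1 - (ResultForce dot TeacherResultForce).
// ASSUMPTION: both vectors are normalized first; the source does not say so.
double trainingError(const Vec3& resultForce, const Vec3& teacherResultForce) {
    return 1.0 - dot(normalized(resultForce), normalized(teacherResultForce));
}
```

With this reading, the error only measures how far the student's steering direction is from the teacher's, not the magnitude of the force.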

Public Types | |

| enum | AggregateStrategy { Average, WinnerTakeAll, User } |

| Strategies to aggregate the steering force vectors from each neighbor computation. For each steering force resulting from a steerForXXX behavior: Average averages the vectors from all neighbor computation contributions to get the steering vector; Winner take all keeps the output*force vector with the largest norm as the only one for this steering force. For the final steering force: Average averages the contributions from all individual steering results, computed as defined above; Winner take all keeps the individual steering force with the largest norm, computed as defined above, as the only one. | |

Public Member Functions | |

| NeuronalAgent (int nhidden, int specie, AggregateStrategy neighborsStrategy=Average, AggregateStrategy forcesStrategy=Average, bool addToGlobalAgentList=false) | |

| virtual void | addTeacher (CrogaiAgent *teacher) |

| virtual void | removeTeacher (CrogaiAgent *teacher) |

| For learning subclasses to be notified when this agent leaves the game. | |

| virtual void | stopTraining () |

| Stops training and removes us from all teachers' student lists. As soon as the training stops, this agent is added to the global agent list and may play the game! You can safely position the agent and prepare its avatar before calling this function; it won't interfere with the training or other agents so long as this function is not called. | |

| virtual void | setMutationParameters (double mutationRate=0.0, double mutationJitter=0.0) |

| Specifies the parameters for the spawning "updateChild" function. | |

Public Attributes | |

| AggregateStrategy | aggregateAgents |

| See comment above about policy: - aggregateAgents: For getting each individual steering force from neighbor computation contributions - aggregateForces: For getting the final steering force from each individual steering force. | |

| AggregateStrategy | aggregateForces |

| See comment above about policy: - aggregateAgents: For getting each individual steering force from neighbor computation contributions - aggregateForces: For getting the final steering force from each individual steering force. | |

| TwoLayerPerceptron | neighborTLP |

| Our brain. Not very much, but more than most living things in the real world out there! | |

| TwoLayerPerceptron | aloneTLP |

| Our brain. Not very much, but more than most living things in the real world out there! | |

| bool | monitorError |

| During training, error can optionally be computed and displayed. | |

Static Public Attributes | |

| static const int | ninput = 10 |

| The numbers of inputs and outputs are common to all agents. | |

| static const int | noutput = 10 |

Protected Member Functions | |

| virtual void | updateChild (CrogaiAgent *child) |

| Subclasses may call this function when spawning to set base class parameters. | |

| virtual void | displayError (double errorValue, bool alone) |

| Display the argument as the current training error for this network. Default is to display it on cout, but subclasses may change this behavior. | |

| virtual void | updateAI (const double elapsedTime, const double currentTime) |

| Perform AI update: The position is constrained to be above the terrain, and attitude is updated accordingly. | |

| virtual void | learnAI (const double elapsedTime, const double currentTime, CrogaiAgent *teacher) |

| Students registered on this teacher should have overloaded this. | |

| virtual void | learnOrUpdateAI (const double elapsedTime, const double currentTime, CrogaiAgent *valueProvider) |

Protected Attributes | |

| double * | input |

| avoid creating input and output parameters each time | |

| double * | output |

| double * | gradout |

| osg::Vec3d * | outputForces |

| osg::Vec3d * | aggregateOutputForces |

| double * | outputForcesLenCache |

| std::list< CrogaiAgent * > | teachers |

| Our teachers. Unused after the training phase. | |

| OpenThreads::Mutex | teacherAccessMutex |

| double | mutationRate |

| See setMutationParameters. | |

| double | mutationJitter |

| See setMutationParameters. | |

Static Protected Attributes | |

| static const double | noutputInverse = 0.1 |

virtual void learnAI (const double elapsedTime, const double currentTime, CrogaiAgent *teacher)

Students registered on this teacher should have overloaded this. This is the function called during teacher updateAI to notify all students. It does nothing by default, and is never called if your object is not registered as a student.

Reimplemented from CrogaiAgent.

virtual void setMutationParameters (double mutationRate=0.0, double mutationJitter=0.0)

Specifies the parameters for the spawning "updateChild" function. These parameters are inherited by the child, so setting them once for the first generation is enough.
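The exact meaning of the two parameters is not spelled out here. One plausible reading, shown as a sketch only, is that each weight is perturbed with probability `mutationRate` by a uniform offset of at most `mutationJitter`. The function `mutateWeights` and this interpretation are assumptions, not the class's confirmed behavior.

```cpp
#include <random>
#include <vector>

// ASSUMED semantics (not confirmed by the source):
//  - mutationRate: probability that a given weight is perturbed
//  - mutationJitter: maximum absolute size of the perturbation
// Returns a mutated copy of the parent's weights for a new child.
std::vector<double> mutateWeights(const std::vector<double>& parentWeights,
                                  double mutationRate,
                                  double mutationJitter,
                                  std::mt19937& rng) {
    std::uniform_real_distribution<double> chance(0.0, 1.0);   // values in [0, 1)
    std::uniform_real_distribution<double> offset(-1.0, 1.0);  // values in [-1, 1)
    std::vector<double> childWeights = parentWeights;
    for (double& weight : childWeights) {
        if (chance(rng) < mutationRate) {
            weight += mutationJitter * offset(rng);
        }
    }
    return childWeights;
}
```

Under this reading, the default values (0.0, 0.0) leave the child's weights identical to the parent's, so evolution only starts once non-zero parameters are set.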

virtual void updateAI (const double elapsedTime, const double currentTime)

Perform AI update: The position is constrained to be above the terrain, and attitude is updated accordingly. Two new internal variables are set for subclasses, which can be checked together with other state variables (position, attitude, etc.).

Subclasses should call this function at the beginning of their AI update routine.

Reimplemented from TerrainAgent.

virtual void updateChild (CrogaiAgent *child)

Subclasses may call this function when spawning to set base class parameters. Each agent has an initial genetic payload consisting of neural network weights, which are NOT its current weights. Indeed, each agent can use its memory and message communication skills during its life, and can also learn and train its network during its life. A new child inherits only the initial weights as genetic information, and does not inherit the changes its parent has learnt. At the very beginning of your program, the first parent may use a teacher to get a reasonable AI and have a chance to survive. As time passes, the new children can evolve these initial weights using a genetic algorithm, and the hope is that they get better than the initial parent. Error monitoring, aggregate policies, and spawn parameters are transferred to the child unchanged.

Reimplemented from CrogaiAgent.
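The split between inherited and learnt weights can be pictured with a small sketch. `Genome` and `spawnChild` are hypothetical names used for illustration only; the real class stores its weights in its `TwoLayerPerceptron` members, and any mutation step is left out here.

```cpp
#include <vector>

// Hypothetical stand-in for the weights carried by one agent.
struct Genome {
    std::vector<double> initialWeights;  // genetic payload, passed on to offspring
    std::vector<double> currentWeights;  // working weights, changed by lifetime learning
};

// The child receives only the parent's initial weights.
// Whatever the parent learnt during its life (currentWeights) is not inherited.
Genome spawnChild(const Genome& parent) {
    Genome child;
    child.initialWeights = parent.initialWeights;
    child.currentWeights = parent.initialWeights;  // the child starts life unlearnt
    return child;
}
```

For example, a parent whose training moved its current weights away from its initial ones would still produce a child that starts from the parent's initial weights.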

bool monitorError

During training, error can optionally be computed and displayed. This class displays it on cout, but subclasses may change this behavior. See the displayError function. monitorError is false by default.

static const int ninput = 10

The numbers of inputs and outputs are common to all agents. They are respectively the number of parameters for the AI algorithm and the number of steering forces to get a coefficient for.
